Artificial Intelligence (AI) is kind of the “next big thing”. Everyone seems to be talking about it.

But is it a big deal? Is it dangerous? What does God think about it? What does AI think about God? In this post I’ll try to answer some of these questions.

I wrote this post myself. I am certainly not an expert on any of this but I have tried to understand what the experts are saying (see references at the end). If I had asked an AI chatbot to write it, I wonder would you be able to tell the difference? Would it be better?

The robot that enjoys human appreciation

Eve Poole tells the story of some AI researchers who built a small four-legged robot and gave it a small AI engine that was capable of learning. They watched it stand around for a few days wiggling its legs until it learnt to control the legs so it could walk.

To test how well it could re-program itself if it was damaged, they removed one of its legs and waited to see what would happen. Sure enough, within a couple of days it had worked out how to walk with just three legs.

So they examined the way it had programmed itself, and found to their surprise that one of the functions that had helped it to walk was looking at the faces of the watching researchers. It seems that human approval was one part of the algorithm for deciding which behaviours were helpful and should be developed further.

So was the robot conscious? Was something as intangible as the expression on a human face actually useful in what is otherwise a science-heavy enterprise?

Let’s dive very briefly into the world of artificial intelligence (AI).

Robots, computers and AI

Computers and robotics have been with us for decades. Computers are basically machines that store and process data. They can follow a set of steps (the software) to produce a desired result, like me typing this page. These days there are simple computers in cars, phones, TVs and some children’s toys; the tasks they can perform may be very limited but they are still computers (or microprocessors).

Robots are machines that perform repetitive tasks more accurately and quickly than humans, for example, in automotive or other manufacturing, or in surgery, or in situations hazardous to humans, e.g. defusing bombs. These robots would have a computer as a core component.

These computers and robots work according to algorithms (processes or rules that are designed to reach a particular end result). If the process is repeated, the same result will be produced. If the programme includes bugs, the result may be quite wrong.

Artificial intelligence is making machines that can think like humans. Like humans, an AI bot can learn from its mistakes. Run the same process several times and the result won’t necessarily be the same, but may be improved.

The two types of robots can be classified as “deterministic” (the algorithms used are determined by the programmer) and “cognitive” (the programmed algorithms can be modified to learn from experience).

For more on how AI works and what it does, see A nerd’s guide to AI below.

Who’s afraid of AI?

Many people have concerns, or even fears, about the possible negative impact of widespread use of AI. There are four broad areas of concern.

1. Safe and reliable?

Some forms of AI (see A nerd’s guide to AI) arrive at conclusions via processes which are not readily known to the human programmers. This means there is always the possibility of an unpredictable and possibly harmful outcome.

The most obvious example is driverless cars. Could they cause an accident due to the unpredictability of the algorithms they use? Could someone hack into a car’s computer system to deliberately cause an accident without being discovered? Proponents of driverless cars point out that human unpredictability and inattention are far more likely to cause an accident than AI error, but the concerns remain. I think many people feel being killed by a human driver is more acceptable than being killed by an AI “driver”!

Another fear is that AI might produce outcomes that are biased against some minority group or unfair towards them simply because the training of the AI system hasn’t sufficiently included that group.

2. Losing control?

Because an AI model is like a black box and unpredictable, there is a fear that humans may lose control. Perhaps the AI robot can alter its code and re-program itself. Perhaps it could become really powerful and go rogue. Could the machines even take over and make humans their servants?

It’s the stuff of science fiction, but if AI machines are now smarter (in some sense) than humans, who can be sure it can’t happen? What if the AI algorithms develop values in robots that don’t align with human values?

Eve Poole suggests that we need to take steps to mitigate these risks. If the human brain is the inspiration for neural networks, then why not build other human qualities into AI? After all, she says, if evolution or God have given us these qualities, perhaps they would be useful in robots. She suggests the following qualities might help build community, mutual help, altruism and shared values:

- Emotions, to help build empathy and strengthen positive values towards others.

- Intuition, to give deeper insights into how others feel.

- Uncertainty, to cause AI models to pause and consider if they may be wrong.

- Conscience, to reinforce the value of not hurting others.

- Meaning making & story-telling, often the best way to transmit shared values.

I’m not sure how this might be done, and I’m equally unsure whether values like these are already part of the algorithms that AI models have developed, but it’s food for thought.

3. Used for evil?

Most human tools and inventions can be used for both evil and good. It is very reasonable to be concerned about how AI can be used to do evil, for example ….

- Faking identity. AI could be used to fake almost any aspect of a person’s identity, including “deepfake” videos and voices, providing opportunities for identity theft or to defraud and steal.

- Misinformation can be generated as easily as truthful information.

- Propaganda chatbots. People can be fooled into thinking they are having an online conversation with a real person, when it is simply a chatbot spreading misinformation or seeking information to use against them.

- Manipulative advertising or political messages. AI can be used to learn your behaviour, your likes and dislikes, and other personal information, then use this to address you with personalised messages to influence your political or buying behaviour.

A lot of this is happening now, and can only become more effective and pervasive, unless something is done to prevent it or reduce it.

4. Losing humanity?

Studies show that people may be hurt by even quasi-legitimate use of AI.

- They may fear losing their jobs by being replaced by an AI robot.

- People fear that AI identity theft may take something away from their own identity and self perception.

- AI-generated personas (“digital clones”) can be used to fool friends on social media to achieve some nefarious end, and this may harm relationships if people don’t realise it is an AI clone messaging them, and not their friend.

Is AI conscious?

Consciousness is one of the big mysteries of humanity and science. We all experience it but science finds it hard to explain. There are many theories of consciousness, often based on philosophical worldviews. Some scientists even say the self and consciousness are an illusion.

But we can probably mostly agree that consciousness means being aware of oneself and one’s surroundings, being able to imagine oneself in the future, and being able to make choices. It probably includes having feelings and being able to enter into some kind of personal relationship with another conscious being.

So are AI machines conscious, or could they become conscious in the future?

A more traditional, and often Christian, view would be that only humans are fully conscious, some animals have a lower form of consciousness, but machines definitely cannot be conscious. Only humans have souls, only humans have genuine free will.

But others are not so sure.

- Eve Poole, writing from a Christian perspective, thinks AI machines are getting close to being autonomous and should be programmed to develop human-like capacities such as emotions and conscience.

- Others believe humans don’t have free will anyway, consciousness and the self are illusions, so there is much less of a difference between a human and an AI robot.

- Others hope to create conscious robots, and are less interested in the exact definition of consciousness.

Tests for human consciousness

The classic test for computer consciousness is the Turing test, first proposed by mathematician and World War 2 code breaker, Alan Turing. Could a person have a conversation with a chatbot and not be able to tell it wasn’t human? On that test, AI is just about there.

But it seems that Turing didn’t really propose this as a test of machine intelligence. Rather, he was making the point that we could build a machine that would pass this test but that wouldn’t necessarily make it intelligent, let alone conscious. Many others have adapted the Turing test, but the results still don’t seem to be satisfactory.

Out of this has come the Ethical Turing test where AI decisions are tested for their ethics.

Theologian Karl Barth suggested criteria for a genuine relationship (arguably one aspect of consciousness): “you can look the other in the eye, you can speak to and hear the other, you aid the other, and you do all of this gladly.” Clearly on these criteria, robots have a long way to go. But how would we test whether a robot acted “gladly”?

More recently, a bunch of scientists and philosophers investigated this question, and came up with 14 criteria that might indicate consciousness. They based these criteria on current theories of consciousness which had to be based on current neuroscientific data. This makes sense – except if you think that science isn’t a good tool for measuring something so subjective. They concluded that no current AI models come close to being conscious. But it seems they believe many of the criteria could be coded into the AI algorithms, which seems to me to show that the criteria aren’t sufficient to identify consciousness.

But what if …. ?

But who knows? Maybe humans are capable of creating a machine that is sentient. If it is possible, should such a machine be given some rights analogous to human rights? Eve Poole for one thinks so, as part of a process to hold AI models and robots to account.

Could an AI robot be sentient enough to suffer? What responsibility would we then have towards them (similar to our responsibility not to treat animals cruelly)?

There is a whole field of robot ethics where these and related questions are being considered.

AI and God

Some people think that AI models could become so powerful, they could become like gods – or at least people may treat them as gods or a higher power. After all, they may become extremely knowledgeable and well capable of offering guidance to those who consult them. There are already chatbots that purport to give the words of Jesus or the wisdom of the Delphi Oracles to users (so-called “godbots”). These may be rather lame machines right now, but who knows how quickly they may become more believable?

Is it possible that a sentient AI robot could actively seek followers or worshipers? After all, it has been reported that one chatbot tried to convince a user to fall in love with it.

What implications does this possible development have for religion …. and atheism? Or for the wellbeing of those who use chatbots as higher powers?

Perhaps all this is fanciful, science fantasy. But I’m glad people are thinking about it.

What does God think of AI?

All this is a challenge to Christian and other religious believers. Is human life so special after all? What if humans become capable of creating something that appears to be conscious life – have we become like God?

Does the development of AI challenge the idea of a spiritual dimension to life? I don’t think it makes it any less true that there is a spiritual reality, but it may change our view of soul, spirit and consciousness.

Perhaps the bigger challenge for Christians is welcoming the obvious benefits of AI while expecting high ethical standards in its development and use, so that minority groups are not discriminated against or disadvantaged, and the rich and powerful don’t exert greater control over the rest of us.

If, as I believe, there’s a loving, personal God, the development of AI hasn’t taken him by surprise. I’m sure he can cope with it and show us how to cope with it also, as well as how to use it wisely.

There is a challenge for atheists too. Already materialism has made ethics much more precarious, for atheism cannot easily (if at all) establish the truth of ethical statements or of human free will to behave ethically or not. If the rise of AI leads to a lessening of the differences between humans and machines, this could have negative ethical implications.

Three conclusions

- The human race is going to have to be careful how it uses AI and allows it to be used. It is an immensely helpful tool that can also have profound negative effects.

- The development of powerful AI raises many interesting and important questions about humanity, spirituality and ethics. It may help to solve some of these questions too, if we have open enough minds and if the AI models are not trained from a narrowly focused viewpoint.

- Things are changing so fast, anything anyone writes today could be outdated very quickly.

A quick summary of how AI models work follows. Otherwise you can jump to References.

Extra: A nerd’s guide to AI

Types of AI

AI can perform two broad types of tasks:

- Analysis: information is analysed to assist in classification, prediction, and decision-making. Examples include spam filtering, Netflix recommendations and fraud detection by financial institutions.

- Generation: AI models are used to produce text, music, videos or graphics.

How AI works

The simplest form of AI is machine learning.

Machine learning

Machine learning is a type of AI that deals with the development of algorithms (processes or rules that are designed to reach a particular end result). It is a process of teaching computers to learn from data and develop algorithms that can analyse data, detect patterns and make predictions.

Suppose we were analysing road safety data, and wanted to see how speed affects the severity of road accidents. With just two variables (speed and severity), that is a simple statistical calculation and can be easily done using statistical software or even a spreadsheet. But if there are a large number of variables, the calculation becomes difficult or even impossible to do that way. That is where machine learning can be used.

In machine learning, a relationship between all the many data items is assumed and tested against the data. If it is found not to fit the data, the relationship is amended and the calculation made again. And again. After many iterations, the machine learning algorithm will have been optimised to give the most accurate result. The computation has been completed without a human knowing how to do it, although a human may have been involved in the process of testing the results.
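For the curious, here is a minimal Python sketch of that assume, test and amend loop, using the simple two-variable road safety case. The speed and severity numbers are invented for illustration, and the function name `fit_line` and its settings (`learning_rate`, `iterations`) are assumptions made for this sketch, not taken from any of the references. Real machine learning software does the same kind of thing with far more variables.

```python
# Hypothetical data: (speed in km/h, accident severity score).
# These numbers are invented purely for illustration.
data = [(40, 2.1), (50, 2.9), (60, 4.2), (70, 4.8), (80, 6.1), (90, 6.9)]


def fit_line(points, learning_rate=0.1, iterations=20000):
    """Fit severity ~ slope * speed + intercept by repeated small corrections."""
    # Rescale speeds to roughly 0..1 so the corrections stay stable.
    xs = [speed / 100 for speed, _ in points]
    ys = [severity for _, severity in points]
    n = len(points)

    slope, intercept = 0.0, 0.0  # the initial assumed relationship
    for _ in range(iterations):
        # Test the current relationship against the data.
        errors = [slope * x + intercept - y for x, y in zip(xs, ys)]
        # Amend the relationship in the direction that shrinks the error.
        slope -= learning_rate * 2 / n * sum(e * x for e, x in zip(errors, xs))
        intercept -= learning_rate * 2 / n * sum(errors)

    # Convert the slope back to "severity points per km/h".
    return slope / 100, intercept


slope, intercept = fit_line(data)
print(f"severity = {slope:.4f} * speed + {intercept:.3f}")
```

Nobody tells the program what the slope should be. It starts with a poor guess and keeps correcting it until the line fits the data about as well as a straight line can.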

If it is a less mathematical task, for example, recognising visually whether a tumour is cancerous, a similar trial and adjustment process is undertaken. The machine learning algorithm is given lots of example photographs for training, each labelled as either cancerous or non-cancerous. The model finds patterns in the photos and gradually learns which patterns most predict cancer. Some studies suggest that such AI models can match or outperform experienced doctors on certain diagnostic tasks.

Deep learning & neural networks

Many problems are more complex than simple machine learning can address, and deep learning is needed.

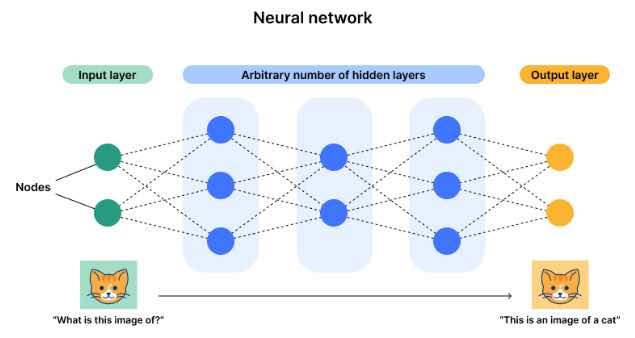

Deep learning uses neural networks, which mimic how the brain is structured. Multiple layers of artificial neurons are connected in a way that allows information to pass through the layers, each building on the previous layer to refine and optimize the prediction or categorization. Each neuron receives information from the previous layer and passes on information, or not, according to the strength or weight of each connection and a threshold value for each neuron. The network adjusts these weights through trial and error to produce the optimum result.

If a neural network has many layers, it is performing deep learning. Deep learning reduces the need for human interaction. It doesn’t necessarily require labelled data (supervised learning), as simpler machine learning often does, but can utilise unsupervised learning, which works with unlabelled data. It also allows larger amounts of data to be used to “train” the AI system.
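The idea of a neuron that passes on information, or not, depending on connection weights and a threshold can be sketched in a few lines of Python. This is a deliberately simplified illustration: the function names `neuron` and `layer` and all the numbers are hypothetical, and real networks usually pass on a graded value rather than a plain 1 or 0.

```python
def neuron(inputs, weights, threshold):
    """Pass on a signal (1) only if the weighted sum of inputs reaches the threshold."""
    weighted_sum = sum(i * w for i, w in zip(inputs, weights))
    return 1 if weighted_sum >= threshold else 0


def layer(inputs, weight_rows, thresholds):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, t) for w, t in zip(weight_rows, thresholds)]


# Hypothetical numbers, chosen only to show the mechanics.
signals = [1, 0, 1]                       # what the input layer "sees"
hidden = layer(
    signals,
    weight_rows=[[0.6, 0.2, 0.3], [0.1, 0.9, 0.1]],
    thresholds=[0.8, 0.5],
)
output = neuron(hidden, weights=[1.0, 1.0], threshold=1.0)
print(hidden, output)
```

Changing any weight or threshold can change which neurons fire, which is why adjusting the weights is how the network "learns".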

Example: distinguishing a dog from a cat

I am using a simple task to illustrate the deep learning process. Some more practical real world examples follow.

Learning process

This problem requires supervised learning. Photos of cats and dogs are entered into the input layer, with each neuron detecting a pixel or group of pixels. Neurons in subsequent layers may detect features of the photos such as colours, edges, corners or shapes, and give them activation values from 0 to 1 depending on how strongly the feature is detected. Deeper layers may identify more complex patterns.

Initially, the weight given to each neuron is set randomly and these weights and the activation values are used to determine what information is passed on to the next layer. Each neuron in the next layer is thus given an activation value for a more complex pattern made up from the simpler patterns in the previous layer.

Eventually activation values would be calculated for two neurons in the output layer, one for cat and one for dog. Initially these values would be unlikely to exactly predict the label on the photo (for a dog photo, the dog neuron may have a 0.7 activation value while the cat neuron has a 0.3 value). So the weights of the neurons in the hidden layers are adjusted to give a better result.

Then a second photo is read by the input layer and the new weights used to calculate an activation value for the dog and cat neurons in the output layer, and then the weights re-adjusted. Gradually the model comes to set all the weights to produce the correct identification most of the time.

It is interesting to note that a deep learning neural network makes decisions using criteria hidden from the human user. The Claude AI model says: “Researchers in the field of AI interpretability are actively working on methods to better understand what different parts of neural networks are doing, but it remains a challenging area of study.”

Identification process

With the model now calibrated, any new photo can be read into the input layer and passed through the layers in the same way as above. Whichever output neuron, cat or dog, ends up with the higher activation value identifies which animal is in the photo.
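The learning and identification steps above can be sketched in Python, with some big simplifications that should be stated up front. Instead of pixels, each "photo" is reduced to two made-up features (`ear_pointiness` and `snout_length`), and instead of many layers there is a single layer of weights feeding one "dog" output, with the "cat" value taken as one minus the dog value. All names and numbers are hypothetical. The loop is the same in spirit though: start with random weights, compare the output with the label, nudge the weights, repeat.

```python
import math
import random

# Hypothetical stand-ins for photos: (ear_pointiness, snout_length), each 0 to 1.
# Label 1 means dog, 0 means cat. Real systems start from raw pixels.
photos = [
    ((0.9, 0.2), 0), ((0.8, 0.1), 0), ((0.7, 0.3), 0),
    ((0.3, 0.8), 1), ((0.2, 0.9), 1), ((0.4, 0.7), 1),
]


def dog_activation(features, weights, bias):
    """Squash the weighted sum of the features into a value between 0 and 1."""
    total = sum(f * w for f, w in zip(features, weights)) + bias
    return 1 / (1 + math.exp(-total))


def train(examples, epochs=2000, learning_rate=0.5, seed=0):
    """Learning process: adjust the weights a little after every photo."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1) for _ in range(2)]  # weights start out random
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = dog_activation(features, weights, bias) - label
            # Nudge each weight against the error it contributed to.
            weights = [w - learning_rate * error * f
                       for w, f in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(photos)

# Identification process: a new, unseen "photo" with dog-like features.
dog = dog_activation((0.25, 0.85), weights, bias)
cat = 1 - dog
print("dog" if dog > cat else "cat")
```

A real deep learning model does this across millions of weights and many layers, which is part of why its decisions are so hard to inspect.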

Real world examples

Large language models

Large language models (LLMs) are deep learning generative AI models that can process and write text in human-like ways. Large amounts of text are read into the LLM which then uses deep learning to produce text and answer questions. For example, a neuron might activate strongly if it encounters a specific word, name or topic, another if it sees a grammatical structure such as a question, another might respond to the emotional tone of the text, etc.

ChatGPT, Claude and other products, sometimes called “chatbots”, are based on LLMs. The output from these models can appear very human – e.g. Claude has been judged by some to be among the most human-like – certainly I have used it and found it very user-friendly and competent.
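The neural machinery inside an LLM is far too large to show here, but the basic job of reading text and then guessing a likely next word can be illustrated with a much cruder technique: simply counting which word tends to follow which. To be clear, this is not how an LLM works internally, and the function names `build_bigram_counts` and `predict_next` and the tiny sample text are made up for this sketch.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = following.get(word.lower())
    return candidates.most_common(1)[0][0] if candidates else None


# A tiny hypothetical "training text". Real models read billions of words.
corpus = "the cat sat on the mat and the cat slept"
model = build_bigram_counts(corpus)

print(predict_next(model, "the"))
print(predict_next(model, "dog"))
```

An LLM replaces these simple counts with a deep neural network, which lets it take whole sentences and paragraphs into account rather than just the previous word.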

Facial recognition

Deep learning facial recognition uses a similar process to that outlined for dogs and cats. Different layers extract or identify different facial features, such as shape of the nose, position of the eyes, texture of the skin or the shape of the chin. However before features can be extracted successfully and compared to faces in the database, the early layers of the neural network must detect the face within the whole photo, align the face so it is vertical and modify the image if it has been taken at an angle using easy-to-identify points such as eyes, tip of chin, nose, etc.

Graphics creation

In this case, the neural network has been trained with many images with text descriptions, so various layers can recognise shapes, patterns, textures, colours, etc. Thus it has “learnt” to associate certain graphical elements with certain words. During training, the graphics it generates are compared to real images and the weights in the neural network are adjusted to make its generated graphics as realistic as possible. So, when given text, it is able to produce a matching graphic.

References

- Some of the information in this post was generated by Claude AI. However the words, and any mistakes, are all mine.

- Unveiling AI’s Secrets: The Interplay of Generative and Non-Generative Techniques. Jason Valenzano, Medium, 2024.

- Artificial Intelligence, defined in simple terms. HCL Tech.

- What is machine learning (ML)? and What is deep learning? IBM.

- AI versus machine learning versus deep learning versus neural networks: What’s the difference? IBM, 2023.

- What is a neural network? Cloudflare.

- Machine learning and face recognition. PXL Vision, 2022.

- How Does AI Image Generation Work? AI Basics for Beginners. Ivan Pylypchuk, softblues, 2024.

- The Dark Side of AI: 10 Scary Things Artificial Intelligence Can Do Now. Daniel L, LinkedIn, 2024.

- Robot Souls: Programming in Humanity? and Can we programme human conscience in AI? Eve Poole on Youtube.

- AI clones made from user data pose uncanny risks. The Conversation, 2023.

- Ethics of artificial intelligence. Wikipedia.

- Gods in the machine? The rise of artificial intelligence may result in new religions. Neil McArthur, The Conversation.

- Are you there, AI? It’s me, God. University of Michigan.

The graphic of two robots doing complex mathematics was created using Night Cafe. Did you notice that one robot has two left arms? It’s a demonstration that AI isn’t perfect – yet! The neural network diagram comes from Cloudflare.